- Re: Gemini Pro for the same prompt, sometimes it r...

Hello everybody!

I'm working with Gemini Pro. It's great, but I've found that for the same prompt, it sometimes returns a response and sometimes doesn't. Why is that?

This is the function I'm using:

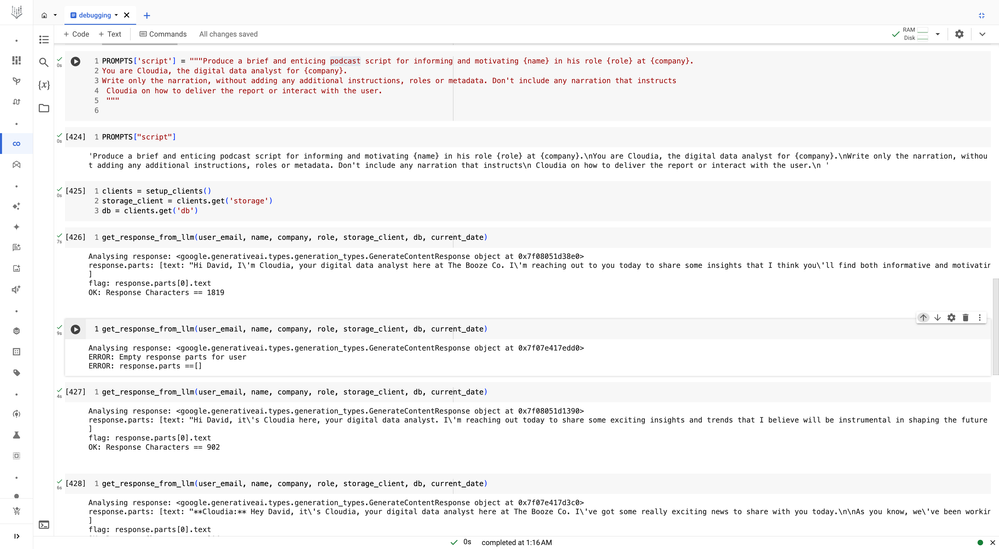

    import google.generativeai as genai

    # FIRESTORE_COLLECTION, GENERATIVE_AI_API_KEY and PROMPTS are defined elsewhere in the module.

    def get_response_from_llm(user_email, name, company, role, storage_client, db, current_date):
        try:
            doc_ref = db.collection(FIRESTORE_COLLECTION).document(user_email).collection(current_date).document("overview")
            #doc_ref = db.collection('boozeco').document(user_email).collection(current_date).document('script')
            doc = doc_ref.get()
            if doc.exists:
                summary = doc.to_dict().get("content")
                prompt = PROMPTS["script"].format(summary=summary, name=name, company=company, role=role)
                genai.configure(api_key=GENERATIVE_AI_API_KEY)
                generation_config = {
                    "temperature": 0.8,
                    "top_p": 0.9,
                    "top_k": 2,
                    "max_output_tokens": 512,
                }
                safety_settings = [
                    {"category": "HARM_CATEGORY_HARASSMENT", "threshold": "BLOCK_MEDIUM_AND_ABOVE"},
                    {"category": "HARM_CATEGORY_HATE_SPEECH", "threshold": "BLOCK_MEDIUM_AND_ABOVE"},
                    {"category": "HARM_CATEGORY_SEXUALLY_EXPLICIT", "threshold": "BLOCK_MEDIUM_AND_ABOVE"},
                    {"category": "HARM_CATEGORY_DANGEROUS_CONTENT", "threshold": "BLOCK_MEDIUM_AND_ABOVE"}
                ]
                model = genai.GenerativeModel('gemini-pro', generation_config=generation_config, safety_settings=safety_settings)
                # Add a 10 second delay between LLM calls to avoid exceeding quota limits
                #time.sleep(10)
                responses = model.generate_content(prompt)
                for response in responses:
                    print(f"Analysing response: {response}")
                    if response.parts:
                        print(f"response.parts: {response.parts}")
                        flag = ""
                        la_respuesta = ""
                        if response.candidates:
                            la_respuesta = response.parts[0].text
                            flag = "response.parts[0].text"
                        else:
                            # candidates is empty here, so indexing candidates[0]
                            # (as the original did) would raise an IndexError.
                            flag = "no candidates"
                        print(f"flag: {flag}")
                        if la_respuesta:
                            print(f"OK: Response Characters == {len(la_respuesta)}")
                            #return la_respuesta
                        else:
                            print("ERROR: There is no la_respuesta")
                            print(f"ERROR: la_respuesta =={la_respuesta}")
                    else:
                        print("ERROR: Empty response parts for user")
                        print(f"ERROR: response.parts =={response.parts}")
            else:
                print(f"No overview document found for user and date {current_date}.")
        except Exception as e:
            print(f"Exception: An error occurred while generating podcast prompt: {e}")

    name = "David"
    company = "The Booze Co"
    role = "VP of Engineering"
    user_email = "david.regalado@somedomain.com"
    current_date = "20240125"

    PROMPTS['script'] = """Produce a brief and enticing podcast script for informing and motivating {name} in his role {role} at {company}.
    You are Cloudia, the digital data analyst for {company}.
    Write only the narration, without adding any additional instructions, roles or metadata. Don't include any narration that instructs
    Cloudia on how to deliver the report or interact with the user.
    """

--

Best regards

David Regalado

Web | Linkedin | Cloudskillsboost

Could you please share the Google Colab link, if you don't mind?

Hi!

You don't need the Colab. The main code was already shared here.

--

Best regards

David Regalado

Web | Linkedin | Cloudskillsboost

Can anyone see this topic?

--

Best regards

David Regalado

Web | Linkedin | Cloudskillsboost

I'm also facing the very same issue.

Glad to know I'm not the only one.

--

Best regards

David Regalado

Web | Linkedin | Cloudskillsboost

Same problem here. Sometimes no answer at all is returned. If I take exactly the same prompt and feed it into gemini-pro again, it returns an answer.

Same here.

I am randomly getting this response from the REST API, without changing the prompt:

    {
      "candidates": [
        {
          "finishReason": "OTHER"
        }
      ],
      "usageMetadata": {
        "promptTokenCount": 5,
        "totalTokenCount": 5
      }
    }

Related: gemini-1.0-pro-002: Randomly gives empty content in responses
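
A payload like the one above has a candidate but no `content`, so code that indexes straight into the parts will fail. Here is a minimal sketch of a defensive parser for the REST response, assuming the JSON has already been decoded into a `dict`. The function name `extract_text` and the reason strings are made up for this example; the field names (`candidates`, `content`, `parts`, `finishReason`, `promptFeedback`, `blockReason`) are the ones the REST API uses.

```python
from typing import Optional, Tuple


def extract_text(payload: dict) -> Tuple[Optional[str], str]:
    """Return (text, reason). `text` is None when nothing usable came back."""
    # A blocked prompt is reported in promptFeedback, with no candidates.
    feedback = payload.get("promptFeedback", {})
    if feedback.get("blockReason"):
        return None, f"prompt blocked: {feedback['blockReason']}"

    candidates = payload.get("candidates", [])
    if not candidates:
        return None, "no candidates returned"

    candidate = candidates[0]
    finish_reason = candidate.get("finishReason", "UNKNOWN")
    # `content` may be missing entirely, as in the payload quoted above.
    parts = candidate.get("content", {}).get("parts", [])
    text = "".join(part.get("text", "") for part in parts)
    if not text:
        return None, f"empty candidate, finishReason={finish_reason}"
    return text, finish_reason


# The payload from this post.
empty_payload = {
    "candidates": [{"finishReason": "OTHER"}],
    "usageMetadata": {"promptTokenCount": 5, "totalTokenCount": 5},
}
print(extract_text(empty_payload))
# prints (None, 'empty candidate, finishReason=OTHER')
```

Logging the returned reason for every failed call makes it easier to tell safety blocks apart from the unexplained `OTHER` case when reporting the problem.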

With a temperature of 0.8, there is no guarantee that the LLM will return the same answer each time. The issue may be in the safety_settings you are using. I am not sure about the content, but a threshold of "BLOCK_MEDIUM_AND_ABOVE" may be too stringent: sometimes the response may be blocked, and you won't get a response. Try "BLOCK_ONLY_HIGH" and see if you get more consistent results.
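
To try this suggestion without editing four dictionaries by hand, the threshold can be made a single parameter. This is only a sketch: `build_safety_settings`, `HARM_CATEGORIES`, and `VALID_THRESHOLDS` are names invented for this example, while the category and threshold strings are the ones used in the original post and the API. Whether a looser threshold helps depends on whether safety filtering is actually what empties the response.

```python
# Harm categories taken from the original post's safety_settings.
HARM_CATEGORIES = (
    "HARM_CATEGORY_HARASSMENT",
    "HARM_CATEGORY_HATE_SPEECH",
    "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "HARM_CATEGORY_DANGEROUS_CONTENT",
)

VALID_THRESHOLDS = {
    "BLOCK_NONE",
    "BLOCK_ONLY_HIGH",
    "BLOCK_MEDIUM_AND_ABOVE",
    "BLOCK_LOW_AND_ABOVE",
}


def build_safety_settings(threshold: str = "BLOCK_ONLY_HIGH") -> list:
    """Return one safety setting per harm category, all with the same threshold."""
    if threshold not in VALID_THRESHOLDS:
        raise ValueError(f"Unknown threshold: {threshold!r}")
    return [{"category": category, "threshold": threshold} for category in HARM_CATEGORIES]


safety_settings = build_safety_settings("BLOCK_ONLY_HIGH")
for setting in safety_settings:
    print(setting)

# Passed to the model the same way as in the original post:
# model = genai.GenerativeModel('gemini-pro', safety_settings=safety_settings)
```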

"BLOCK_ONLY_HIGH" didn't solve my problem.

I even used "BLOCK_NONE", and it still sometimes throws that error.

Even with BLOCK_ONLY_HIGH, it still behaves inconsistently. Out of 10 identical calls, 3 return an error. It is annoying, and Google doesn't seem to care, even though I have an open P2 ticket and have posted in these forums as well.

If Gemini wants to catch up to OpenAI, they should start listening to us, the early adopters, before we fall back to OpenAI out of frustration.

The BLOCK filters are still totally crazy. They classify as "harmful" many totally harmless requests. It is INSANE. EXAMPLE (May 6th, 2024, Gemini 1.0 pro - 002):

Q: Who is the main actress in the tv series "sex and the city"?
